Artificial intelligence used to be an imaginative concept that only came to life in sci-fi movies. Now it’s fast becoming our reality. Since A.I. has already been integrated into our world, schools like Palomar have to figure out how to address it in their policies.

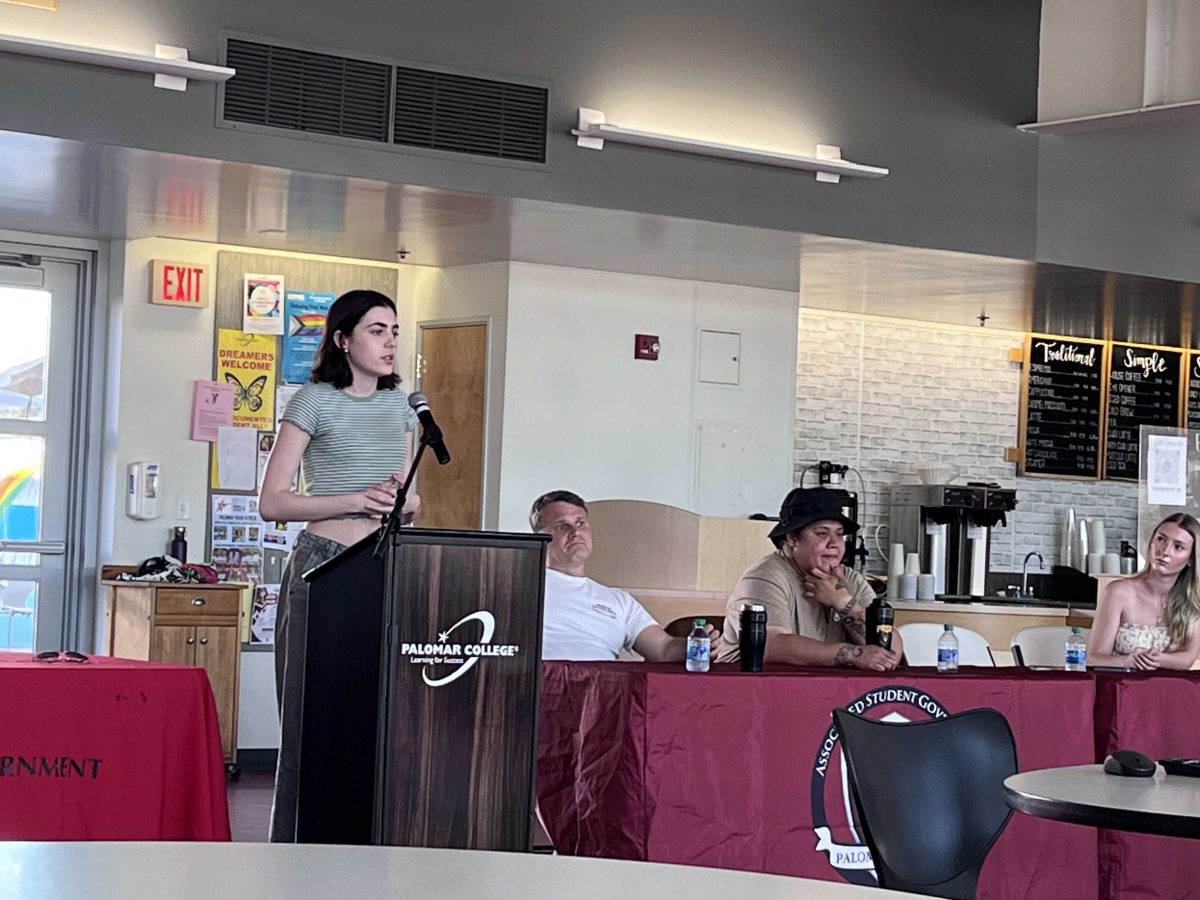

In response to this change, an Academic Integrity Task Force was formed. The task force chair, Katy Farrell, shared how Palomar is preparing for generative A.I. in education.

Katy Farrell is a librarian and a professor. She has worked at Palomar for over 17 years and currently manages the Escondido Center library. The Academic Integrity Task Force (AITF) is getting the “behind-the-scenes work done right now” to approach academic dishonesty and generative A.I., according to Farrell. There are 10 members of the AITF: Farrell, other course instructors, an administrator, the dean, the current head of student life & leadership, and a student voice.

Farrell shared in an interview that administrators can see the benefits of generative A.I. “There are some really good uses of generative A.I.… It’s just another tool, right, another form of technology. The pencil was a tool, the typewriter was a tool,” she said.

She also described how A.I. can act as a kind of tutor. “Just like you can’t have a tutor write your paper for you, you can’t have A.I. write the paper for you,” she said.

She clarified that academic dishonesty has been around a lot longer than A.I. or ChatGPT, and teachers have seen all types of it. Cheating isn’t something new.

Most professors can tell when a student doesn’t use their own words. Farrell noticed in the spring of 2023 that students would use ChatGPT to summarize sources. “It was pretty obvious… A.I. content tends to be very artificial, it doesn’t have a soul,” she said.

As of right now, Palomar has certain A.I. detection tools in place, but they are not 100% accurate. Professors are told to talk with students personally if they suspect plagiarism.

Appropriate A.I. use depends on the individual course. The AITF sees the educational use of A.I. as a matter left to each professor’s discretion.

“Our message to instructors is to make it clear how students may or may not use generative A.I., and our message to students is to make sure you understand… and if you’re not sure, always ask your instructor,” Farrell said.

She said it is hard to define what constitutes cheating or academic dishonesty when it comes to A.I. “There are some really good uses of generative A.I.… You must acknowledge when you use A.I. the same way you do when you cite sources,” she said. However, the AITF expects that there will be unethical uses of A.I., which is why the task force was formed.

Farrell shared that the AITF has three goals: to review old policies, to support faculty in upholding academic integrity, and to create new, transparent policies that promote academic integrity.

There are currently no guidelines in Palomar’s policies that directly address A.I. usage. Farrell explained that Palomar’s administrative policies are very broad and general. The AITF is now working on definitions for the use of A.I. in an educational setting. The task force started working on this matter in the spring of 2023 and expects to finish in May 2024.